Late Adopter

I’ve gotten in the habit of taking a little time at the beginning of every year for general maintenance of the machines, which I’ve written about several times. I’ve been reading a lot of other opinions on various technologies recently and I’ve really started to consider why people decide to publish anything. I’m really having to dig to separate the information from those who have lots of experience and enthusiasm from that of those who are either getting paid or trying to get paid in the form of self promotion by showing off their ‘knowledge’. There’s a lot of bad knowledge out there from the latter and sometimes it gives me imposter syndrome as to why I’m publishing anything. Even though I tend to respect the opinions and efforts of others, I generally try not to form my own opinion until I’ve had my own experiences by digging through it. As a kid, I liked to disassemble my electronics and either try to put them back together or, as was often the case, leave them in permanent states of disrepair.

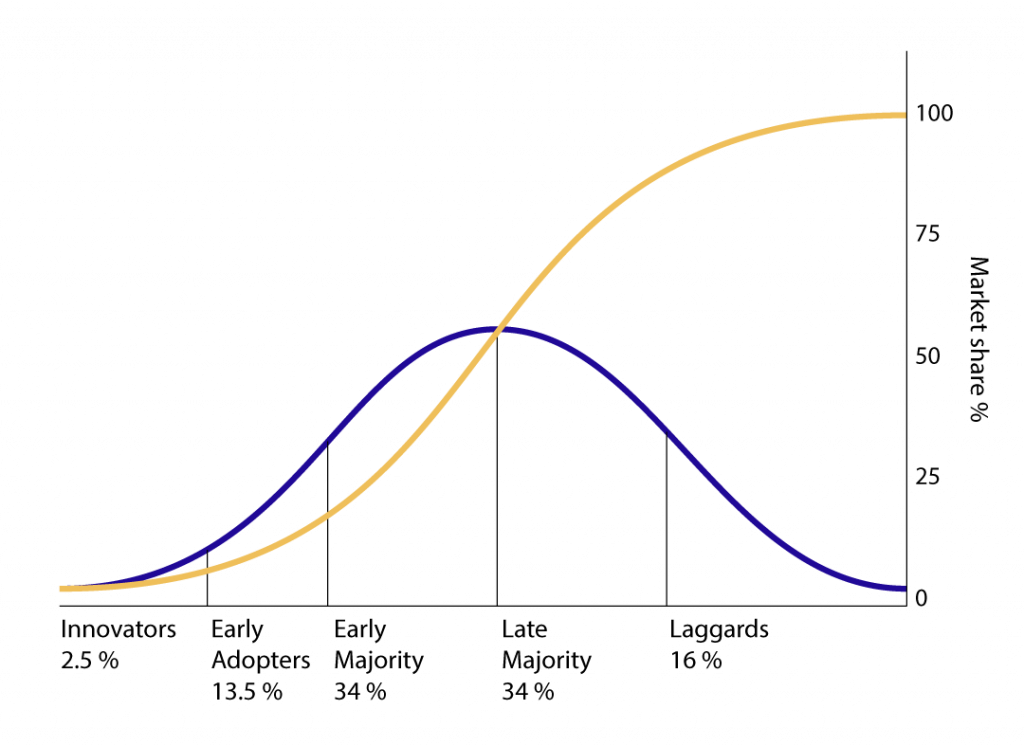

I like to tell folks I’m a late adopter. Let everyone else be the guinea pigs. Sometimes I take it to the extreme, like how I refuse to upgrade my smart phone until it’s absolutely dead. I’m currently six years behind on an iPhone 5 and I still use my original version 1 as a nightstand alarm clock. The book Diffusion of Innovations1 was first published in 1962 and first described the process by which an innovation is communicated over time among the participants in a social system. According to that theory, I’d fall into the 34% ‘late majority’ right around the midpoint of the distribution curve. This could explain why my brother liked to call me a poser2 when we first got into skateboarding or how he insisted that I immediately move to Ruby on Rails3 version 1 in 2005 when we first started web development. I’ve found that I’m very much OK being right in the middle of the distribution curve for most anything. It’s comfortable. This may seem counterintuitive considering that I’m often responsible for making technology recommendations, but I’ve found that it’s an effective strategy to save money, time, and labor.

While running the annual updates on the machines I use for development, I’ve noticed how much less work was involved on the machines that aren’t configured manually and rely on Homebrew or Docker to create the development environments. With Homebrew4 and Docker5 I’m putting in three commands to spin up entire development environments, whereas I was previously spending countless hours configuring the system manually and ‘googling’ through every error on Stack Overflow. One of the projects on my plate right now involves reconsidering the hosting for a relatively high volume client. I’m pretty well versed in the scalability and performance of various systems, but I haven’t yet dug too deep into containerization or container orchestration systems because I haven’t had the need to. I’ve mostly been throwing money at additional hardware as a solution, but the quote from a current vendor came back way too high. My proposed alternatives ended up being services built around, or managing, hardware orchestrated6 by Kubernetes7 from the big three: Amazon Web Services, Microsoft Azure, and Google Cloud. I really don’t want to do any additional system administration or pay for managed services, but I also want to avoid the technical debt8 of continually patching systems and upgrading software. Although my bread and butter is in custom development, I still feel the need to disassemble these systems so that I have a solid understanding of the full stack.

I’m a late adopter. The idea of Infrastructure as Code9 and cloud orchestration systems has been gaining traction for a long time. I first dabbled with Chef and Puppet quite a number of years ago, but I’ve been mostly constrained to solo DevOps10 and have lacked the production experience with them to form any real solid opinion one way or the other. My upgrades this year reflect a change in that process. I maintain quite a number of servers and applications running an assortment of programming languages and databases, many of which are running on different infrastructure. Instead of just trying to keep everything on standard Long Term Support (LTS)11 versions, I’ve been maintaining separate versions of languages and databases on my machines. I’ve decided that the best path going forward is to mitigate the extraneous annual work by using Dockerfiles to create the various environments I need on the fly. This will also give me the ability to keep those instances in line with the upstream12 providers. Because those instances are virtualized, I’ll still maintain a local core of languages and databases in my ‘wheelhouse’. The minimalist purist in me still wants as few moving parts as possible and is opposed to what is essentially server middleware13, but the practical reality is that I end up spending too much time on configuration. This is really just an extension of the meaning of ‘convention over configuration’14. And although I’m still kinda sold on Ansible15 because of how simple it is and the fact that it doesn’t consume any hardware resources, container orchestration with Docker and Kubernetes is truly helpful with systems of scale.

I’ve now got at least a week of work invested in orchestration systems. I really enjoyed the ease of working with Rancher16. It’s just an outright impressive tool. These newfound skills are just enough for me to get what I need and I’m barely scratching the surface. It’s teaching me to focus on what I enjoy doing, which is building functional logic and features for applications. And although these skills really help me to fully understand the stack, I don’t want to spend a quarter of my time on it because in the end, no one, especially the paying clients, cares how it’s running, just what it does and how well. I’ve been a loyal Linode customer for quite some time and I’m in on the beta of their Kubernetes17 Engine. I’m sure to find some real world usage, learn some new skills, and gain a deeper understanding. I’m a bit skeptical of vendor lock-in and I’ll wait another couple of years to dive completely into the JamStack server-less computing18 trend. I see the value of a single language stack and infinite scalability. I’m gearing up for it, but I’m still not entirely sold on the idea of purposely building software to capitalize on how the big cloud providers are dictating the use of dirt cheap CPUs or CDNs. Almost all of the ‘server-less’ vendors depend on Kubernetes to provide ‘Function as a Service’ (FaaS)19. These hot ticket keywords are often misleading and confusing. There are still sysadmins in ‘server-less-land’. And although Rancher, Docker, and Kubernetes are essentially the current pinnacle of infrastructure technology, I’m not really in the business of providing cloud services. I’ll let everyone else work that out until a leading strategy emerges. I’m happy right here in the middle of the majority as a late adopter.

1) https://en.wikipedia.org/wiki/Diffusion_of_innovations

2) https://www.wikihow.com/Differentiate-Between-a-Real-Skater-and-a-Poser-Skater

3) https://en.wikipedia.org/wiki/Ruby_on_Rails

4) https://en.wikipedia.org/wiki/Homebrew_(package_management_software)

5) https://en.wikipedia.org/wiki/Docker_(software)

6) https://en.wikipedia.org/wiki/Orchestration_(computing)

7) https://en.wikipedia.org/wiki/Kubernetes

8) https://en.wikipedia.org/wiki/Technical_debt

9) https://en.wikipedia.org/wiki/Infrastructure_as_code

10) https://en.wikipedia.org/wiki/DevOps

11) https://en.wikipedia.org/wiki/Long-term_support

12) https://en.wikipedia.org/wiki/Upstream_(software_development)

13) https://en.wikipedia.org/wiki/Middleware

14) https://en.wikipedia.org/wiki/Convention_over_configuration

15) https://en.wikipedia.org/wiki/Ansible_(software)

16) https://en.wikipedia.org/wiki/Rancher_Labs

17) https://www.linode.com/products/kubernetes/

18) https://en.wikipedia.org/wiki/Serverless_computing

19) https://en.wikipedia.org/wiki/Function_as_a_service