ARM

I’m a bit of a late adopter [1], so I generally let the dust settle before diving in. I just got my first Apple M1 [2] device last week, but I’ve been following the move to the ARM architecture [3] relatively closely because I’m curious and my workflow depends on it. I bought an M1 iPad Pro, and I’m planning on switching out my main development computers this year after Apple releases a higher-powered ARM chip, now that Linux kernel v5.13 includes initial support for the M1. At this point, the higher-powered chips are rumored to have between 10 and 32 GPU cores. I’d prefer to see a higher-powered Mac Mini over a lower-powered, smaller-form Mac Pro. My guess is that they’ll do both, only offering the higher GPU core options on the latter.

I’ve always used one desktop and one laptop for my work. I’ve found myself a bit more tied to the desktop simply due to the monitor real estate, and the laptop has been mostly reserved for the couch and travel. It’s important that I can replicate my development environments across multiple devices and servers. Aside from graphics software, the vast majority of my work only depends on a couple of text editors, several programming languages, some open source databases, a terminal, and a package manager, all of which now officially support the ARM architecture in the M1 Macs. I’m looking forward to making the switch on both my local machines and my servers. Both Debian and Ubuntu now offer 64-bit ARM (AArch64) versions.

The primary reason I got the iPad Pro was for the touch screen and Apple Pencil, knowing that the M1 now has enough power to handle the graphics software efficiently. Now that I’m not tied to a peripheral touch screen device strapped to my desk, I can do the graphics work wherever. Although I’ve bemoaned iOS for a while, I’ve started to include the iPad as a working device not only for graphics, but also for communications, messages, emails, and documents. The Magic Keyboard is backlit, so it’s perfect for lounging on the couch or in bed. I’ve come to really appreciate the simplified app versions of many applications while working on the iPad. The Adobe Suite products perform as well as their desktop versions running on my Intel i7 and i9 machines. Overall, the experience of working on the new M1 iPad is superb because of the weight, display, pencil, and performance.

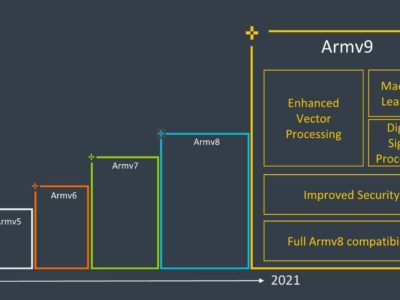

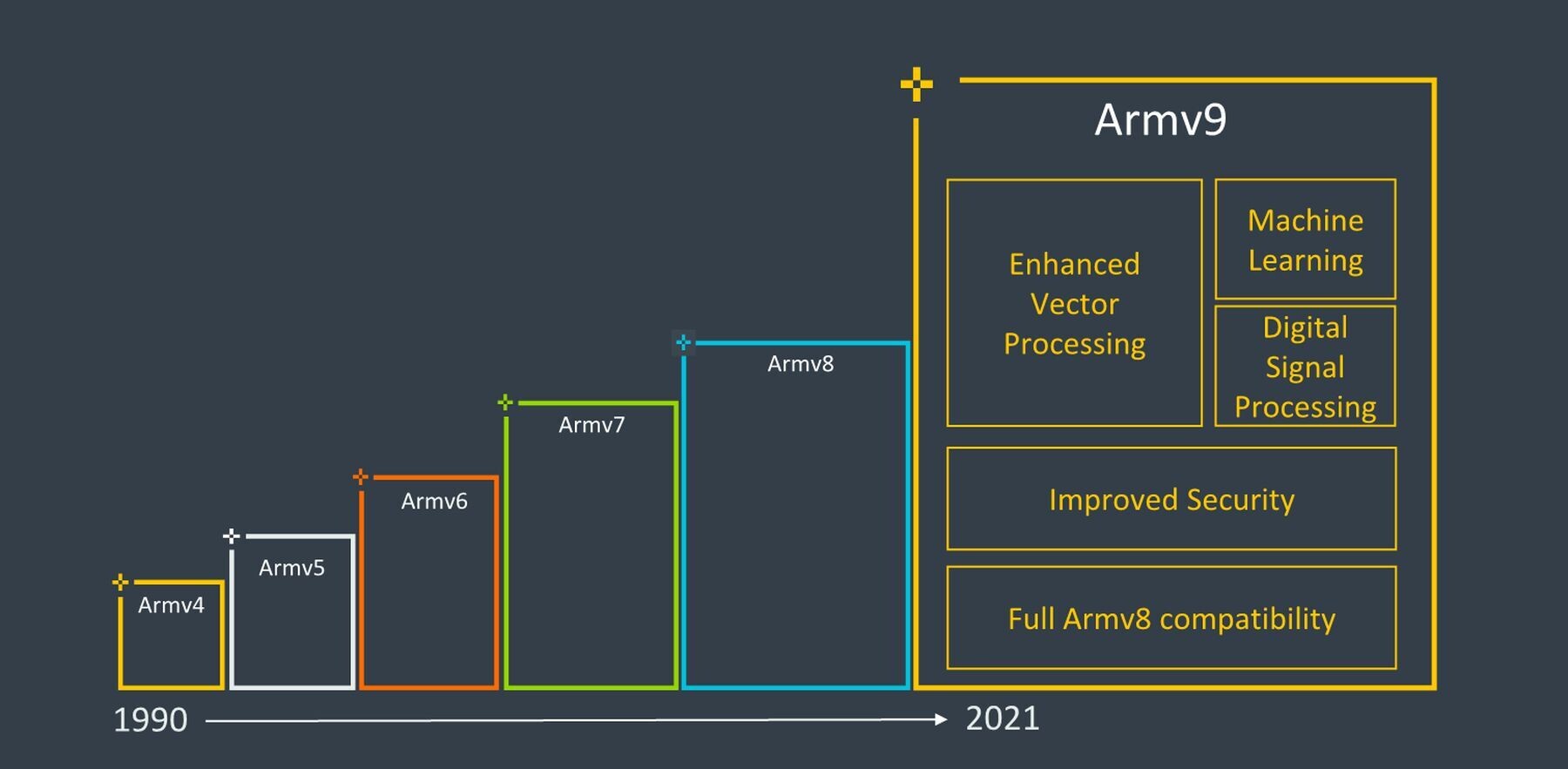

Anyone who’s taken note of the performance benchmarks or has used an M1 device knows exactly how energy efficient and performant they are. In the early ’90s I remember considering a Sun Microsystems RISC-based desktop over a Mac. I can’t remember why I was drawn to the SPARC system because I certainly didn’t understand RISC then. The M1 Mac Mini only draws 39 watts at maximum load, and I haven’t once felt my new iPad turn into a lap warmer. I’ve always just kinda suspected that ARM knew what they were doing simply because they were based in Cambridge. The old days of ARM just being in your Nokia phone or Nintendo Switch are over. Nvidia knows the deal. They agreed to spend $40 billion to acquire ARM Ltd last year [4]. ARM architecture now powers the world’s fastest supercomputer, the Japanese Fujitsu Fugaku, running AArch64 at 415 petaFLOPS. It’s 2.8 times as fast as IBM Summit, its nearest competitor [5]. It all started with the rise in mobile computing, but it certainly helped when ARM made its licensing more flexible a couple of years ago. I think Intel and other companies will eventually adopt the open RISC-V chip standard developed at the University of California, Berkeley [6].
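As a quick sanity check on those two supercomputer figures, here is a minimal sketch of the arithmetic. It only uses the numbers quoted above (415 petaFLOPS and the 2.8x ratio); the function name is just something made up for illustration, and the result is the runner-up figure implied by those numbers rather than an official benchmark.

```python
# Figures quoted in the post; everything derived from them is an estimate.
FUGAKU_PFLOPS = 415.0  # Fugaku's quoted performance in petaFLOPS
SPEEDUP = 2.8          # "2.8 times as fast" as the runner-up


def implied_runner_up(fastest_pflops: float, ratio: float) -> float:
    """Return the runner-up performance implied by a speed ratio."""
    return fastest_pflops / ratio


if __name__ == "__main__":
    runner_up = implied_runner_up(FUGAKU_PFLOPS, SPEEDUP)
    print(f"Implied runner-up performance: {runner_up:.1f} petaFLOPS")
```

So the quoted ratio puts the runner-up somewhere just under 150 petaFLOPS.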

A friend of mine who teaches statistics at a regional university told me one time that he’s avoided technology based on advice he received that it’s a continuous learning curve versus a relatively static field of knowledge. I like to point out that his field of expertise is now dominated by programming. For some reason, people just assume I know ALL technology and are always asking me tech questions that I have absolutely no clue about. It’s mostly relatives asking me why their phone battery is dead or which printer to buy. I usually just search for answers and pass them off as expertise. Some folks recently asked me for advice about investing in technology companies, to which I simply answered ‘ARM’. I think the energy efficiency and performance will expand the usage in almost every area of computing. R.I.P. x86, but please don’t short Intel or buy Fujitsu based on my take here.

So for now, I’m watching out to make sure all of my software dependencies are available for ARM. First to market is not always best, and I’d prefer to be a bit of a late adopter and not adjust any of my workflow. MySQL beat MariaDB and RHEL beat Ubuntu to it. I’m prodding my favorite hosting company for adoption. Oracle just released hosted ARM-powered servers based on Ampere chips [7], and Amazon is using Neoverse cores for its ARM-powered servers [8]. The video below is from a fella who happens to be in Charleston, South Carolina this week… because, well, he lives mostly on a boat. You can follow along with him and his wife over at https://mvdirona.com/ [9]. In it he explains how much of the software on his boat is powered by ARM processors.
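For the dependency watching, the first thing worth knowing is which architecture a given machine or server actually reports. Here is a minimal sketch using Python’s standard `platform` module. The `is_arm64` helper and the set of names are my own assumptions for illustration: as far as I know, macOS reports `arm64` and Linux reports `aarch64` for 64-bit ARM.

```python
import platform

# Machine strings assumed to mean 64-bit ARM:
# "arm64" (macOS) and "aarch64" (Linux).
ARM64_NAMES = {"arm64", "aarch64"}


def is_arm64(machine=None):
    """Return True if the given (or current) machine string is 64-bit ARM."""
    name = (machine or platform.machine()).lower()
    return name in ARM64_NAMES


if __name__ == "__main__":
    print(f"machine: {platform.machine()}, arm64: {is_arm64()}")
```

One caveat: this reports the architecture the Python interpreter itself is running as, so an Intel build of Python running under Rosetta 2 would likely report `x86_64` even on an M1.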

Although I understand many of the fundamentals, I’m still just searching up the references and passing it off as expertise. As long as my computers, software, servers, and web applications are performing well for cheap, I’m content not to dig any deeper into the fundamentals of CISC [10] vs. RISC [11]. I’d guess that my new ARM lineup should last me about ten years. Perhaps chips will move from silicon to graphene by then. According to Moore’s law, obsolescence will eventually push us up against the limits to growth [12]. Americans throw away about 400,000 phones daily [13]. We should consider the consequences. Personally, I think technology has the ability to create as many problems as it actually helps solve, if not more. I also don’t think we humans have the capacity to come up with any problems that will require 128-bit computing, so we’re certainly nearing those limits.

1. Late Adopter – David A. Windham – https://davidawindham.com/late-adopter/

2. Apple M1 – https://en.wikipedia.org/wiki/Apple_M1

3. ARM architecture – https://en.wikipedia.org/wiki/ARM_architecture

4. Nvidia to Acquire Arm for $40 Billion, Creating World’s Premier Computing Company for the Age of AI – https://nvidianews.nvidia.com/news/nvidia-to-acquire-arm-for-40-billion-creating-worlds-premier-computing-company-for-the-age-of-ai/

5. Fujitsu Fugaku – https://en.wikipedia.org/wiki/Arm_Ltd.#Arm_supercomputers

6. RISC-V – https://en.wikipedia.org/wiki/RISC-V

7. ARM-based cloud computing is the next big thing: Introducing Arm on Oracle Cloud Infrastructure – https://blogs.oracle.com/cloud-infrastructure/arm-based-cloud-computing-is-the-next-big-thing-introducing-arm-on-oci

8. AWS Graviton Processor – https://aws.amazon.com/ec2/graviton/

9. Dirona Around the World – James Hamilton – https://mvdirona.com/

10. CISC – Complex Instruction Set Computer – https://en.wikipedia.org/wiki/Complex_instruction_set_computer

11. RISC – Reduced Instruction Set Computer – https://en.wikipedia.org/wiki/Reduced_instruction_set_computer

12. Moore’s Law – Consequences – https://en.wikipedia.org/wiki/Moore%27s_law#Consequences

13. U.S. Environmental Protection Agency – Cleaning up Electronic Waste – https://www.epa.gov/international-cooperation/cleaning-electronic-waste-e-waste